
A Usability Test of M2 Interactive

Findings

We recorded each participant's responses along with our observations of their session, then collated this information and assessed it for commonalities. We discovered several usability issues common to most participants using the M2 Interactive app, as well as some issues unique to individual participants.

Participant Responses & Observations

Observations about each participant's experience were recorded, along with their answers to 10 standard questions. Each participant had the opportunity to respond to any observations, and their final thoughts were captured. Participants' identities have been redacted in the documents below to protect their privacy.

| Participant 1 | Participant 2 | Participant 3 | Participant 4 | Participant 5 | Participant 6 |

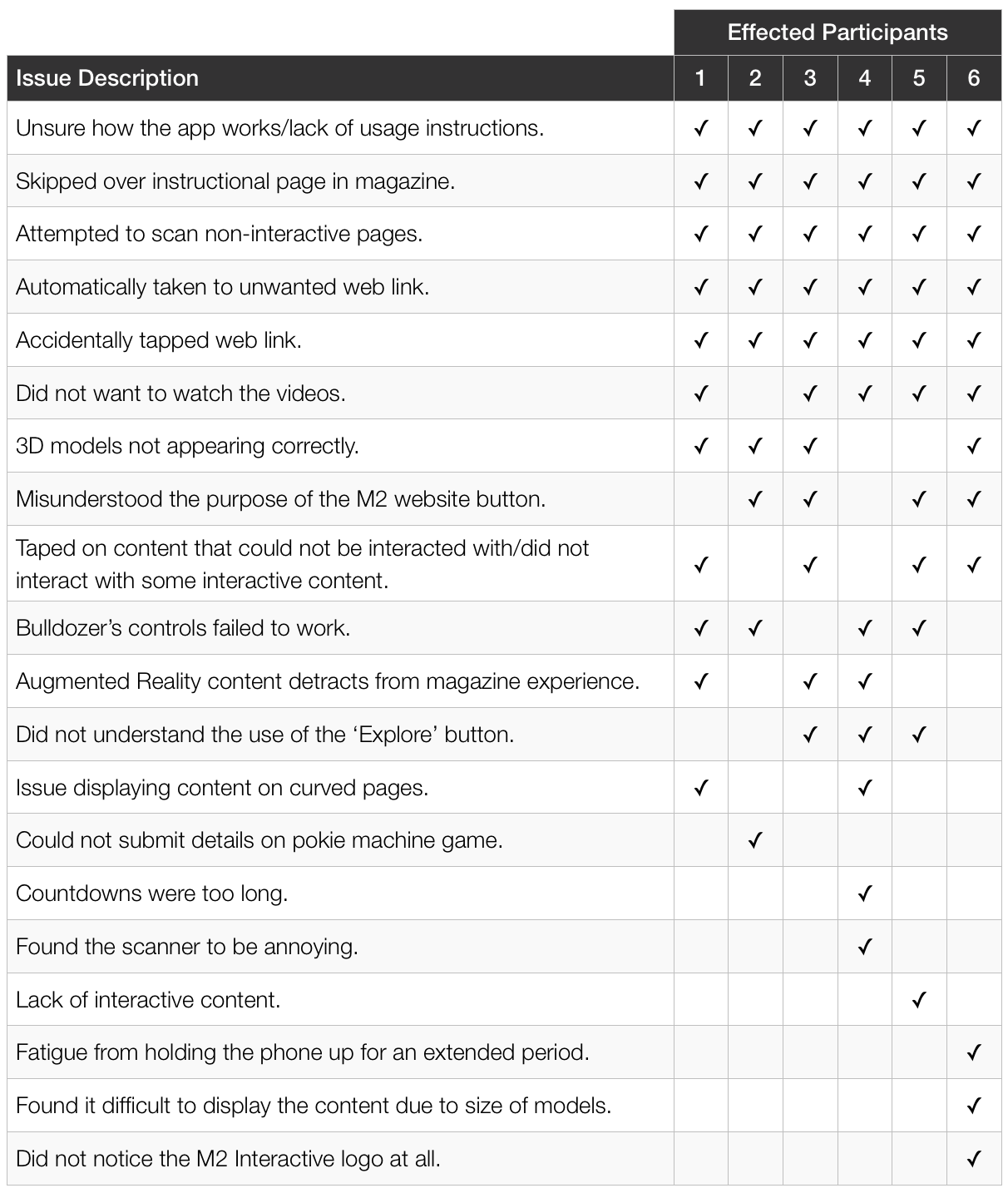

Usability Issues Experienced

Below is a list of the issues experienced, along with the participants affected by each. The list is ordered by the number of affected participants, with the most prevalent issues at the top.

Notable Trend

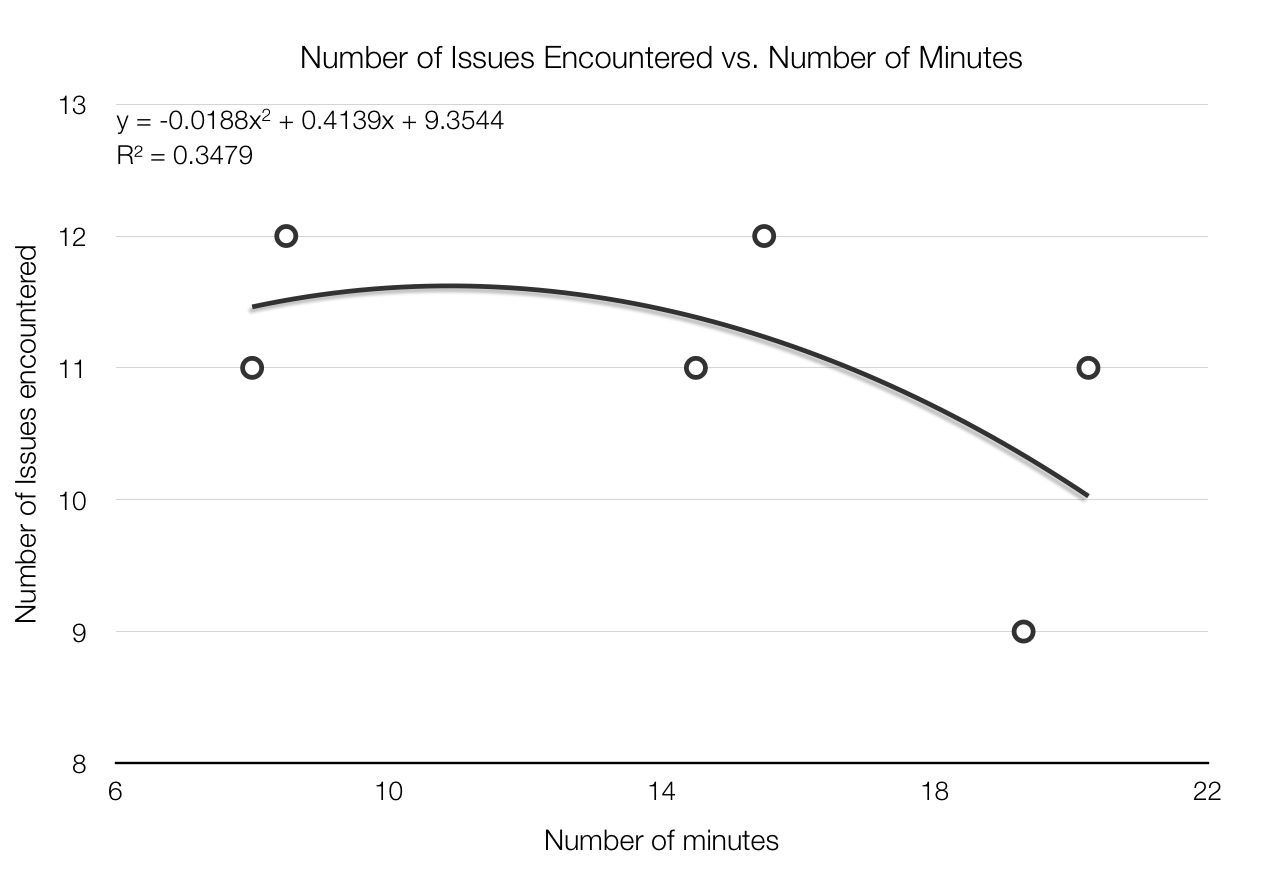

Plotting the number of issues each participant encountered against the number of minutes they spent in the application reveals a negative polynomial trend. This trend suggests that participants who spent more time in the application encountered fewer errors.

This suggests that some of the issues encountered may have been caused by user error, resulting from participants not spending enough time in the application to carry out tasks in the way they were designed to be performed.

Common Usability Issues

Of the twenty different issues experienced across the six participants, five were experienced by every participant during their use of M2 Interactive.

All participants skipped over the instructional guide on page 8 of the magazine and were unsure how to use the application correctly. As the app does not include an in-app user guide, every participant learned how to use it by trial and error. During this learning process, every participant attempted to scan non-interactive pages of the magazine. Every participant also accidentally tapped a web link button and was unintentionally taken out of the app and into the web browser.

Five of the six participants did not wish to watch the videos, as they were uninterested in this type of media. Four participants experienced issues with 3D models not appearing correctly, and two of them found the curvature of the page to be partially responsible. Four participants misunderstood the purpose of the M2 website button, and four either attempted to tap non-interactive content or did not realise they could tap interactive content.

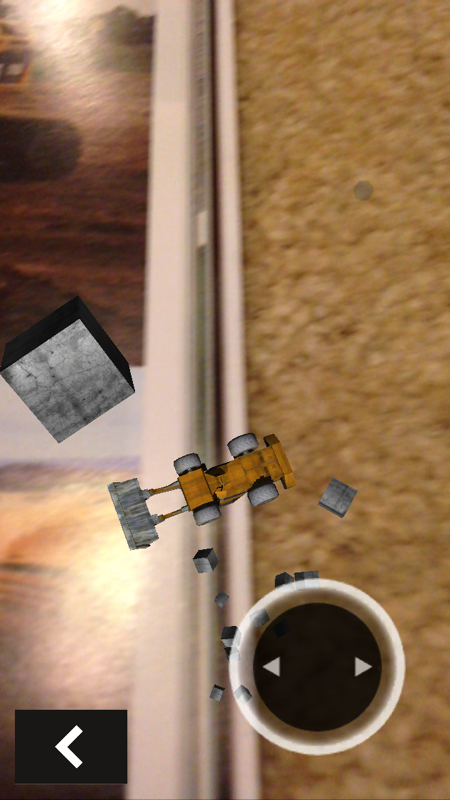

Three participants were unsure about the purpose of the ‘Explore’ button, including the only participant who had previously used Augmented Reality. All participants who attempted to play the bulldozer game were unable to do so because of faulty controls.
